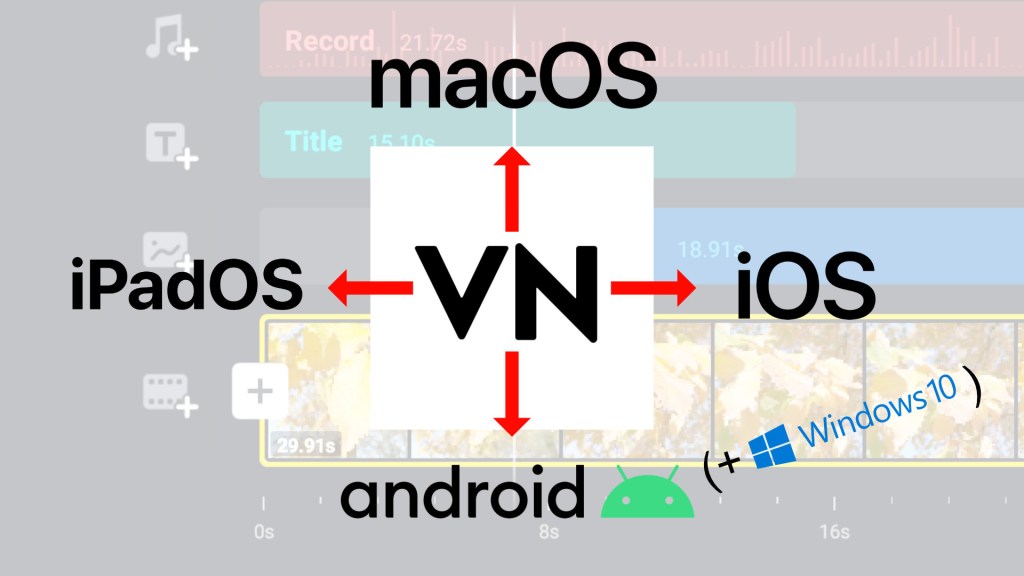

If you want to learn how smartphones and other compact mobile cameras can be powerful and fascinating tools for videography, you have come to the right place! I'm covering a variety of aspects of this topic, including mobile devices/cameras, operating systems, apps, accessories and the art of mobile videography, particularly what I like to call "phoneography". This knowledge can be very useful for a whole range of professional and/or hobby videography enthusiasts and visual storytellers: mobile journalists, smart(phone) filmmakers, vloggers, YouTubers, social media content creators, business or NGO marketing experts, teachers, educators or hey, even you if you're "just" making home movies for your family! Your phone is a mighty media production powerhouse. Learn how to unleash and wield it, right here on smartfilming.blog!

Feel free to connect with me on other platforms like Mastodon, Twitter, YouTube or Telegram (click on the icons):

For a complete list of all my blog articles, click here.

To get in touch via email, click here.

To donate to this cost & ad-free blog via PayPal, click here.
